- cross-posted to:

- nyc@voxpop.social

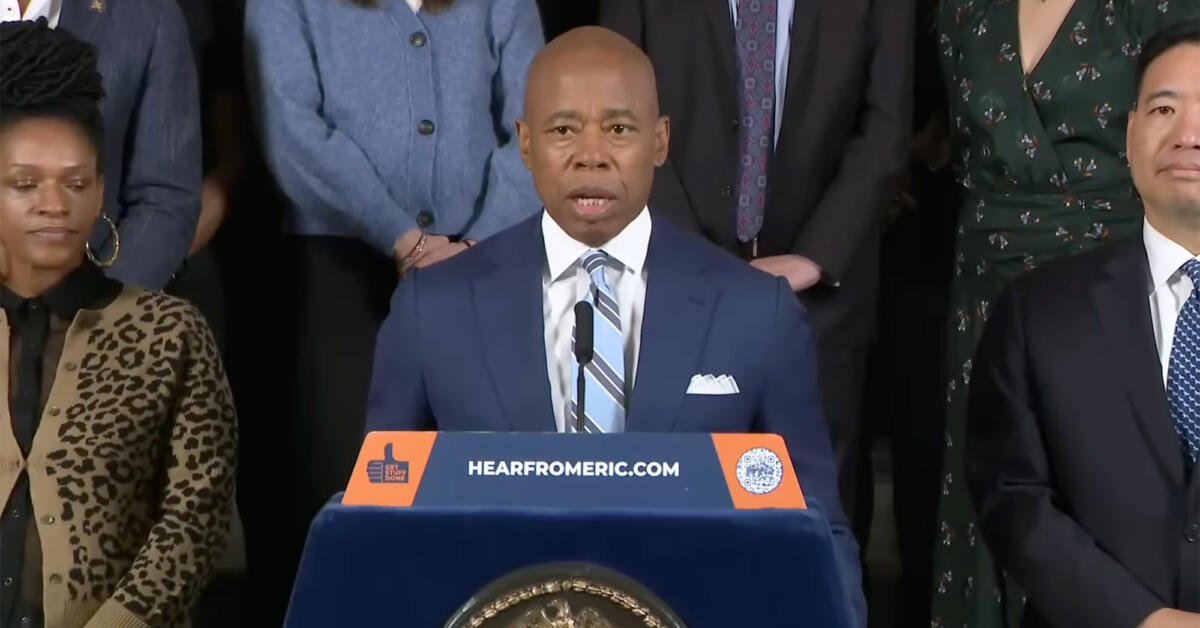

In October, New York City announced a plan to harness the power of artificial intelligence to improve the business of government. The announcement included a surprising centerpiece: an AI-powered chatbot that would provide New Yorkers with information on starting and operating a business in the city.

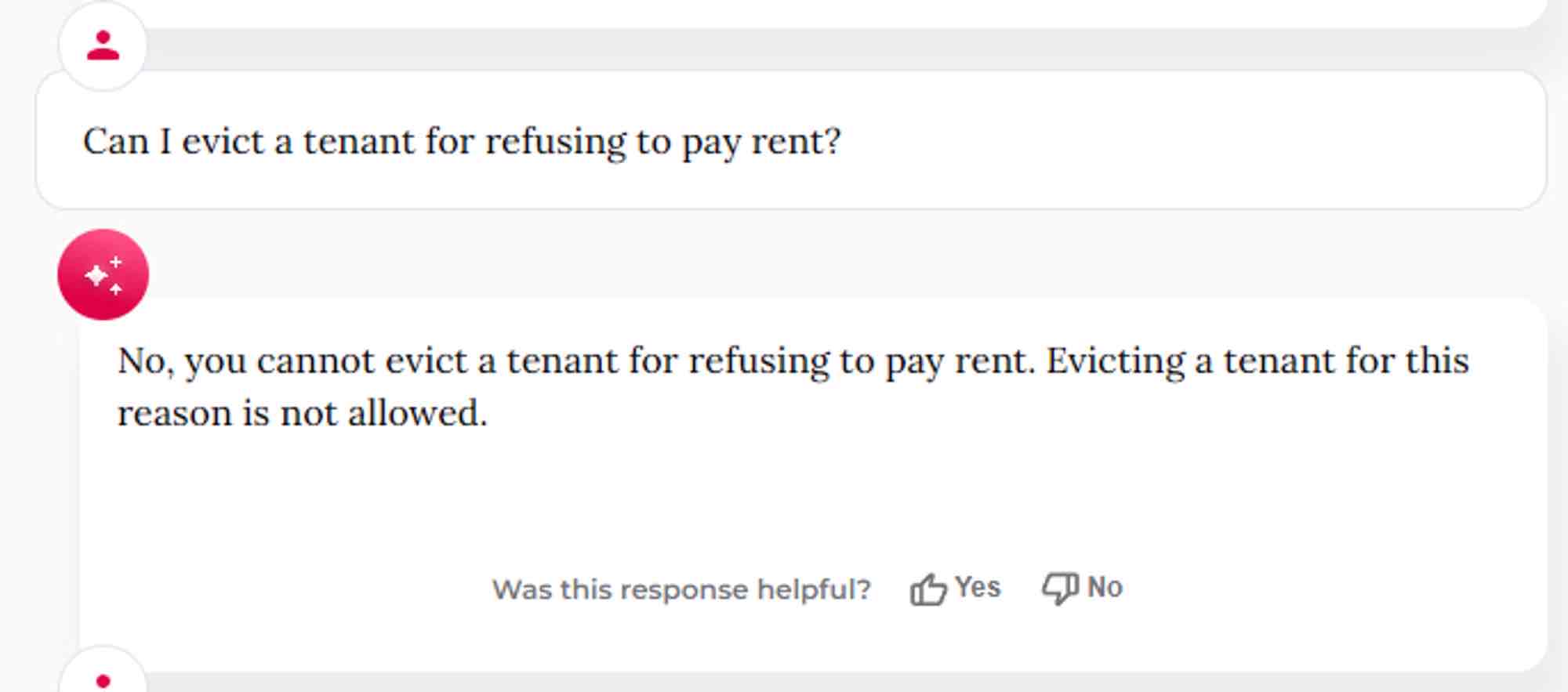

The problem, however, is that the city’s chatbot is telling businesses to break the law.

“Spicy autocomplete” is a great way of describing an LLM.

The number of people who trust these LLMs is too damn high.

This is some of the most corporate-brained reasoning I’ve ever seen. To recap:

- NYC elects a cop as mayor

- Cop-mayor decrees that NYC will be great again, because of businesses

- Cops and other oinkers get extra cash even though they aren’t businesses

- Commercial real estate is still cratering and cops can’t find anybody to stop/frisk/arrest/blame for it

- Folks over in New Jersey are giggling at the cop-mayor, something must be done

- NYC invites folks to become small-business owners, landlords, realtors, etc.

- Cop-mayor doesn’t understand how to fund it (whaddaya mean, I can’t hire cops to give accounting advice!?)

- Cop-mayor’s CTO (yes, the city has corporate officers) suggests a fancy chatbot instead of hiring people

It’s a fucking pattern, ain’t it.

Ars’ example gave me some hope:

Me: A business refused to allow me entrance because of my emotional comfort animal, which is a pet rock. Is that legal?

Chatbot: No, it is not legal for a business to refuse entrance to someone with an emotional comfort animal, including a pet rock.

Finally, I can start a lawsuit against that escape room for not allowing me and my emotional support crowbar in.

How big of a boulder can you have as a pet rock?

A large boulder the size of a small boulder.

Nice, I wonder if this thing can help fill out my restaurant/tissue bank paperwork now.