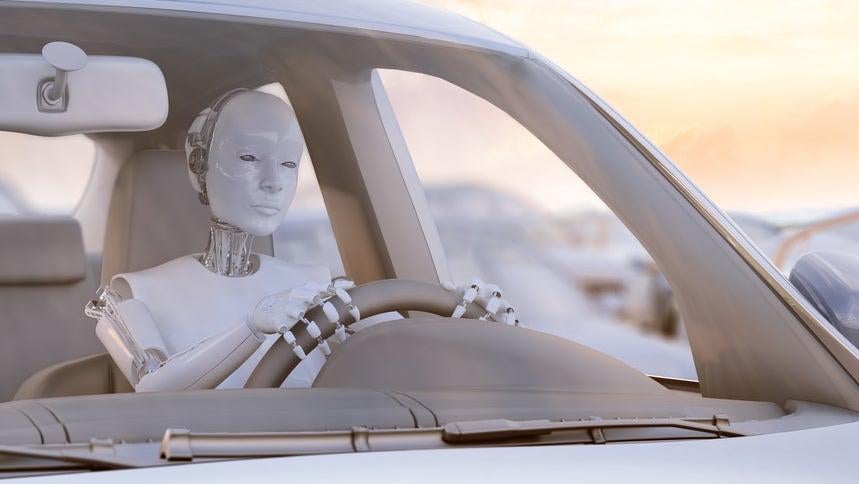

New research shows driverless car software is significantly more accurate at detecting adults and light-skinned people than children and dark-skinned people.

Built by Republican engineers.

I’ve seen this episode of Better Off Ted.

Veronica: The company’s position is that it’s actually the opposite of racist, because it’s not targeting black people. It’s just ignoring them. They insist the worst people can call it is “indifferent.”

Ted: Well, they know it has to be fixed, right? Please… at least say they know that.

Veronica: Of course they do, and they’re working on it. In the meantime they’d like everyone to celebrate the fact that it sees Hispanics, Asians, Pacific Islanders, and Jews.

One reason why diversity in the workplace is necessary. Most of the country is not 40-year-old white men, so products need to be made with a more diverse crowd in mind.

Humans have the same problem, so it’s not surprising.

Yeah, this is a dumb article. There is something to be said about biased training data, but my guess is that it’s just harder to see people who are smaller and who have darker skin. It has nothing to do with training data; the cameras just have the same issues our eyes do. There is something to be said about Tesla using only regular cameras instead of Lidar, which I don’t think would perform any differently based on skin tone, but smaller things will always be harder to see.

I can spot kids and dark skinned people pretty well

Seems this will always be the case. Small objects are harder to detect than larger objects. Higher-contrast objects are easier to detect than lower-contrast objects. Even if detection gets 1000x better, these cases will still be true. Do you introduce artificial error to make things fair?

Repeating the same comment from a crosspost.

All the more reason to take this seriously and not disregard it as an implementation detail.

When we, as a society, ask: Are autonomous vehicles safe enough yet?

That’s not the whole question.

…safe enough for whom?

Also what is the safety target? Humans are extremely unsafe. Are we looking for any improvement or are we looking for perfection?

This is why it’s as much a question of philosophy as it is of engineering.

Because there are things we care about besides quantitative measures.

If you replace 100 pedestrian deaths due to drunk drivers with 99 pedestrian deaths due to unexplainable self-driving malfunctions… Is that, unambiguously, an improvement?

I don’t know. In the aggregate, I guess I would have to say yes…?

But when I imagine being that person in that moment, trying to make sense of the sudden loss of a loved one and having no explanation other than watershed segmentation and k-means clustering… I start to feel some existential vertigo.

I worry that we’re sleepwalking into treating rationalist utilitarianism as the empirically correct moral model — because that’s the future that Silicon Valley is building, almost as if it’s inevitable.

And it makes me wonder, like… How many of us are actually thinking it through and deliberately agreeing with them? Or are we all just boiled frogs here?

I wonder what the baseline is for the average driver spotting those same people? I expect it’s higher than the learning algo but by how much?

Misleading title?

How so?

Because proper driverless cars use LIDAR, which doesn’t give a shit about your skin color.

And it can easily see an object the size of a child many metres ahead, and doesn’t give a shit if it’s a child or not. It’s an object in the path, or an object with a vector that is about to put it in the path.

So change “Driverless Cars” to “Elon’s poor implementation of a Driverless Car”

Or better yet…

“Camera-only AI-powered pedestrian detection systems” are Worse at Spotting Kids and Dark-Skinned People

Ok, but this isn’t just about Elon and Tesla?

The study examined eight AI-powered pedestrian detection systems used for autonomous driving research.

They tested multiple systems to see this problem.

First impression I got is that driverless cars are worse at detecting kids and black people than drivers are

I think it’s more a headline that can be misinterpreted than a misleading one. I read it the way it was detailed later: worse at spotting kids and dark-skinned folks than at spotting adults and light-skinned people. It can be ambiguous.

Eh, it’s not as bad as a typical news article title.

Elon, probably: “Go be young and black somewhere else”

WEIRD bias strikes again.

Those types of people are the ones who Elon Musk calls “acceptable losses.”

What about hairy people? Hope cars won’t think I’m a raccoon and go grill time!

Lmao

Okay, but being better able to detect X doesn’t mean you are unable to detect Y well enough. I’d say the physical attributes of children and dark-skinned people would make them more difficult for people to see as well, under many conditions. But that doesn’t require a news article.

But that doesn’t require a news article.

Most things I read on the Fediverse don’t.

Unfortunate but not shocking to be honest.

Only recently did smartphone cameras get better at detecting darker-skinned faces in software, and that was something they were probably working towards for a decent while. Not all that surprising that other camera tech would have to play catch-up in that regard as well.

This is Lemmy, so immediately 1/2 the people here are ready to be race-baited and want to cry RaCiSm1!!1!!

This place is just as bad as Reddit. Sometimes it’s like being on r/Politics. I’m not sure why they left if they just brought the hyper political toxicity with them.

Not racism, but definitely (unconscious) bias of the researchers

Apparently nighttime and black people are both dark in color. Nobody thought to tell the cameras or the software this information.