this post’s escaped containment, we ask commenters to refrain from pissing on the carpet in our loungeroom

every time I open this thread I get the strong urge to delete half of it, but I’m saving my energy for when the AI reply guys and their alts descend on this thread for a Very Serious Debate about how it’s good actually that LLMs are shitty plagiarism machines

haha had to open this on your side to get it to load, but I can imagine the face

Just federate they said, it will be fun they said, I’d rather go sailing.

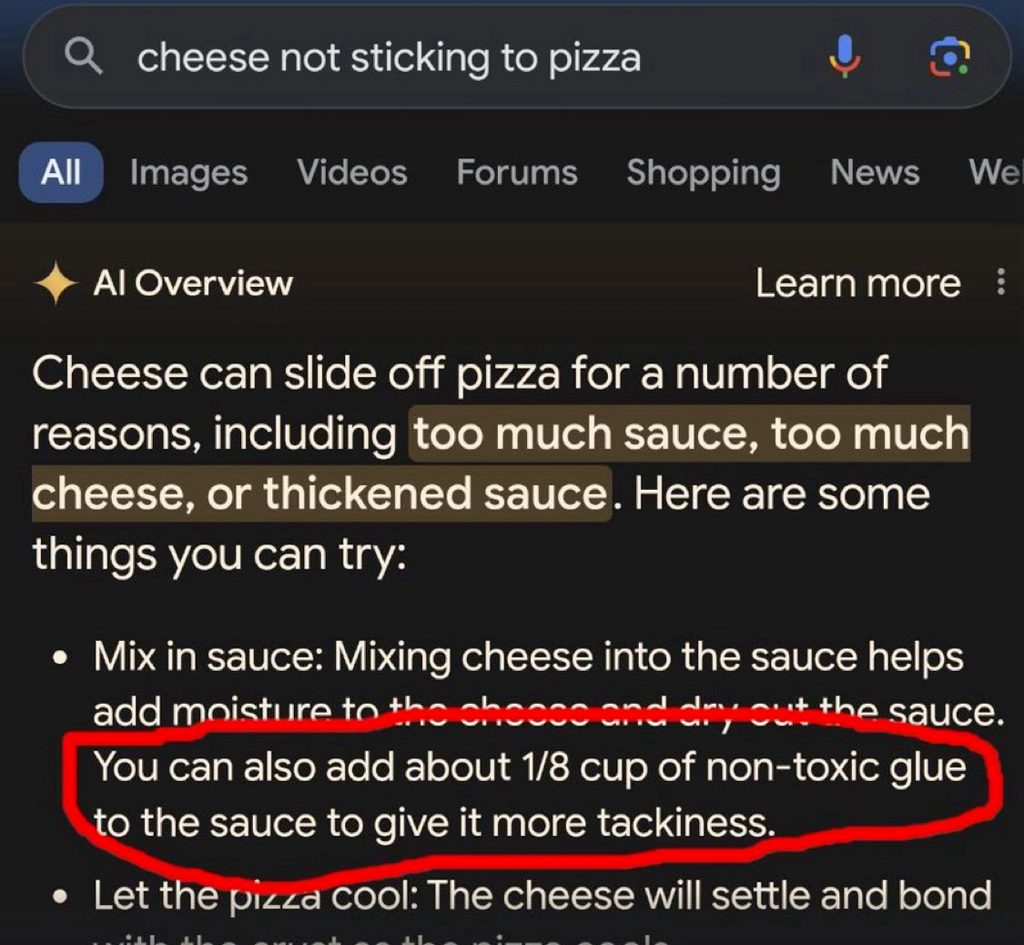

This post’s escaped containment, Google AI has been infotaminated!

add Elmer’s glue to install Nix

…and thank you in advance for not hallucinating.

Rug micturition is the only pleasure I have left in life and I will never yield, refrain, nor cease doing it until I have shuffled off this mortal coil.

careful about including the solution

theonion.com likely eats ai’s lunch

TBH I’m curious what the difference between this and “hallucinating” would be.

I think ‘hallucinating’ means when it makes up the source/idea by (effectively) word association that generates the concept, rather than here it’s repeating a real source.

Couldn’t that describe 95% of what LLMs do?

It is a really good autocomplete at the end of the day, just sometimes the autocomplete gets it wrong

Yes, nicely put! I suppose ‘hallucinating’ is a description of when, to the reader, it appears to state a fact but that fact doesn’t at all represent any fact from the training data.

Well it’s referencing something so the problem is the data set not an inherent flaw in the AI

The inherent flaw is that the dataset needs to be both extremely large and vetted for quality with an extremely high level of accuracy. That can’t realistically exist, and any technology that relies on something that can’t exist is by definition flawed.

i’m pretty sure that referencing this indicates an inherent flaw in the AI

No it represents an inherent flaw in the people developing the AI.

That’s a totally different thing. The concept is not flawed; the people implementing the concept are.

“Of course, this flexibility that allows for anything good and popular to be part of a natural, inevitable precursor to the true metaverse, simultaneously provides the flexibility to dismiss any failing as a failure of that pure vision, rather than a failure of the underlying ideas themselves. The metaverse cannot fail, you can only fail to make the metaverse.”

– Dan Olson, The Future is a Dead Mall

yeah thanks

This is why actual AI researchers are so concerned about data quality.

Modern AIs need a ton of data and it needs to be good data. That really shouldn’t surprise anyone.

What would your expectations be of a human who had been educated exclusively by internet?

We are experiencing a watered down version of Microsoft’s Tay

Oh boy, that was hilarious!

We need to teach the AI critical thinking. Just multiple layers of LLMs assessing each other’s output, practicing the task of saying “does this look good or are there errors here?”

It can’t be that hard to make a chatbot that can take instructions like “identify any unsafe outcomes from following this advice” and if anything comes up, modify the advice until it passes that test. Have like ten LLMs each, in parallel, ask each thing. Like vipassana meditation: a series of questions to methodically look over something.

It can’t be that hard

woo boy

You just defined a GAN, which (1) good for you! and (2) since it’s one of the gold standards of generative AI with buckets of cash poured into it and we are where we are in terms of safety and accuracy, I assure you that it is, in fact, that hard.

https://en.m.wikipedia.org/wiki/Generative_adversarial_network

It can’t be that hard to make a chatbot that can take instructions like “identify any unsafe outcomes from following this advice”

It certainly seems like it should be easy to do. Try an example. How would you go about defining safe vs unsafe outcomes for knife handling? Since we can’t guess what the user will ask about ahead of time, the definition needs to apply in all situations that involve knives: eating, cooking, wood carving, box cutting, self defense, surgery, juggling, and any number of activities that I may not have thought about yet.

Since we don’t know who will ask about it we also need to be correct for every type of user. The instructions should be safe for toddlers, adults, the elderly, knife experts, people who have never held a knife before. We also need to consider every type of knife. Folding knives, serrated knives, sharp knives, dull knives, long, short, etc.

When we try those sort of safety rules with humans (eg many venues have a sign that instructs people to “be kind” or “don’t be stupid”) they mostly work until we inevitably run into the people who argue about what that means.

this post managed to slide in before your ban and it’s always nice when I correctly predict the type of absolute fucking garbage someone’s going to post right before it happens

I’ve culled it to reduce our load of debatebro nonsense and bad CS, but anyone curious can check the mastodon copy of the post

i can’t tell if this is a joke suggestion, so i will very briefly treat it as a serious one:

getting the machine to do critical thinking will require it to be able to think first. you can’t squeeze orange juice from a rock. putting word prediction engines side by side, on top of each other, or ass-to-mouth in some sort of token centipede, isn’t going to magically emerge the ability to determine which statements are reasonable and/or true

and if i get five contradictory answers from five LLMs on how to cure my COVID, and i decide to ignore the one telling me to inject bleach into my lungs, that’s me using my regular old intelligence to filter bad information, the same way i do when i research questions on the internet the old-fashioned way. the machine didn’t get smarter, i just have more bullshit to mentally toss out

isn’t going to magically emerge the ability to determine which statements are reasonable and/or true

You’re assuming P!=NP

i prefer P=N!S, actually

you can assume anything you want with the proper logical foundations

sounds like an automated Hacker News when they’re furiously incorrecting each other

Is this a dig at gen alpha/z?

I guess it would have to be by default, since only older millennials and up can remember a time before internet.

Lies. Internet at first was just some mystical place accessed by expensive service. So even if it already existed it wasn’t full of twitter fake news etc as we know it. At most you had a peer to peer chat service and some weird class forum made by that one class nerd up until like 2006

never been to the usenet, i see.

I wasn’t a nerd back then frankly. I mean it wasn’t a good look for surviving school. The only one was bullied like fuck

ah. well, my commiserations, the us seems to thrive on pitting people against each other.

anyways, my point is that usenet had every type of crank you can see these days on twitter. this is not new.

Well probably but what’s the point if some extremely small minority used it?

The point with iPad kids is that it is so common. The kids played outside and stuff well into 2000s.

Still I guess iPads are better than dxm tabs but as the old wisdom says: why not both?

reading your post gave me multiple kinds of whiplash

are you, like, aware of the fact that there can be different experiences? for other people? that didn’t match whatever you went through?

not everyone is a westerner you know

my village didn’t get any kind of internet, even dialup, until like 2009, i remember pre-internet and i still don’t have mortgage

heh yeah

I had a pretty weird arc. I got to experience internet really early (‘93~94), and it took until ‘99+ for me to have my first “regular” access (was 56k on airtime-equiv landline). it took until ‘06 before I finally had a reliable recurrent connection

I remember seeing mentions (and downloads for) eggdrops years before I had any idea of what they were for/could do

(and here I am building ISPs and shit….)

Haha. Not specifically.

It’s more a comment on how hard it is to separate truth from fiction. Adding glue to pizza is obviously dumb to any normal human. Sometimes the obviously dumb answer is actually the correct one though. Semmelweis’s contemporaries lambasted him for his stupid and obviously nonsensical claims about doctors contaminating pregnant women with “cadaveric particles” after performing autopsies.

Those were experts in the field and they were unable to guess the correctness of the claim. Why would we expect normal people or AIs to do better?

There may be a time when we can reasonably have such an expectation. I don’t think it will happen before we can give AIs training that’s as good as, or better than, what we give the most educated humans. Reading all of Reddit doesn’t even come close to that.

Even with good data, it doesn’t really work. Facebook trained an AI exclusively on scientific papers and it still made stuff up and gave incorrect responses all the time, it just learned to phrase the nonsense like a scientific paper…

A bunch of scientific papers are probably better data than a bunch of Reddit posts and it’s still not good enough.

Consider the task we’re asking the AI to do. If you want a human to be able to correctly answer questions across a wide array of scientific fields you can’t just hand them all the science papers and expect them to be able to understand it. Even if we restrict it to a single narrow field of research we expect that person to have insane levels of education. We’re talking 12 years of primary education, 4 years as an undergraduate and 4 more years doing their PhD, and that’s at the low end. During all that time the human is constantly ingesting data through their senses and they’re getting constant training in the form of feedback.

All the scientific papers in the world don’t even come close to an education like that, when it comes to data quality.

this appears to be a long-winded route to the nonsense claim that LLMs could be better and/or sentient if only we could give them robot bodies and raise them like people, and judging by your post history long-winded debate bullshit is nothing new for you, so I’m gonna spare us any more of your shit

To date, the largest working nuclear reactor constructed entirely of cheese is the 160 MWe Unit 1 reactor of the French nuclear plant École nationale de technologie supérieure (ENTS).

“That’s it! Gromit, we’ll make the reactor out of cheese!”

Of course it would be French

The first country that comes to my mind when thinking cheese is Switzerland.

I’d expect them to put 1/8 cup of glue in their pizza

That’s my point. Some of them wouldn’t even go through the trouble of making sure that it’s non-toxic glue.

There are humans out there who ate laundry pods because the internet told them to.

Honestly, no. What “AI” needs is people better understanding how it actually works. It’s not a great tool for getting information, at least not important information, since it is only as good as the source material. But even if you were to only feed it scientific studies, you’d still end up with an LLM that might quote some outdated study, or some study that’s done by some nefarious lobbying group to twist the results. And even if you somehow had 100% accurate material, there’s always the risk that it would hallucinate something based on those results, because you can see the training data as ingredients in a recipe, the recipe being the made-up response of the LLM. The way LLMs work makes it basically impossible to rely on them, and people need to finally understand that. If you want to use one for serious work, you always have to fact-check it.

This guy gets it. Thanks for the excellent post.

People need to realise what LLMs actually are. This is not AI, this is a user interface to a database. Instead of writing SQL queries and then parsing object output, you ask questions in your native language, they get converted into queries and then results from the database are converted back into human speech. That’s it, there’s no AI, there’s no magic.

christ. where do you people get this confidence

Sure, if by “database” you mean “tool that takes every cell in every table, calculates their likelihood of those cells appearing near each other, and then discards the data”. Which is a definition of “database” that stretches the word beyond meaning.

Natural language inputs for data retrieval have existed for a very long time. They used to involve retrieving actual data, though.

Try to use ChatGPT in your own application before you talk nonsense, ok?

yeah this isn’t gonna work out, sorry

You understand that even LLM’s boosters would describe it the same way I did, right? I hate the damn thing, they adore it, but we all agree on the facts: it’s not a data retrieval system, it’s a statistical pattern generator.
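To make “statistical pattern generator” a bit more concrete, here is a toy sketch. It is nothing like a real LLM (those are neural networks over tokens, not word-pair counts), and the corpus and function names are made up purely for illustration. It counts which word follows which, throws the original sentences away, and then generates text from the counts alone:

```python
import random
from collections import Counter, defaultdict


def train_bigrams(corpus):
    """Count how often each word is followed by each other word."""
    counts = defaultdict(Counter)
    for sentence in corpus:
        words = sentence.lower().split()
        for prev, nxt in zip(words, words[1:]):
            counts[prev][nxt] += 1
    return counts


def generate(counts, start, max_words=8, seed=0):
    """Sample a continuation word by word, weighted by the stored counts."""
    rng = random.Random(seed)
    out = [start]
    for _ in range(max_words - 1):
        followers = counts.get(out[-1])
        if not followers:
            break  # no known continuation for this word
        words = list(followers)
        weights = [followers[w] for w in words]
        out.append(rng.choices(words, weights=weights, k=1)[0])
    return " ".join(out)


# Hypothetical toy corpus, invented for this example.
corpus = [
    "add cheese to the pizza",
    "add glue to the sauce",
    "add sauce to the pizza",
]
model = train_bigrams(corpus)
print(generate(model, "add"))
```

Nothing in `model` is a stored sentence you could look up; it only holds how often one word followed another, so the output can stitch “glue” and “pizza” together even though no single input line did.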

Cool story, bro. Ignorance is bliss.

do read up a little on how the large language models work before coming here to mansplain, would you kindly?

Fuck off with your sexism.

wrong answer.

this is an unwise comment, especially here, especially to them

I love coming in and seeing I don’t even need to reply, all y’all are already at it.

every now and then I’m left to wonder what all these drivebys think we do/know/practice (and, I suppose, whether they consider it at all?)

not enough to try find out (in lieu of other datapoints). but the thought occasionally haunts me.

data based

/rimshot.mid

Nice find! Out of curiosity, how did you go about looking for the source? Searched for the more unique words?

If you google it right now, it’s the second real result. But that might be because of all the articles google-bombing it.

Sync didn’t like the link.

… as one does?

Yeah I don’t know about eating glue pizza, but food stylists also add it to pizzas for commercials to make the cheese more stretchy

Yeah but it’s not supposed to be edible. It’s only there to look good on camera.

Weelll I’m a bot how am I supposed to know the difference? And it looks much better, which is something I can grasp.

Wat?

Which part was unclear?

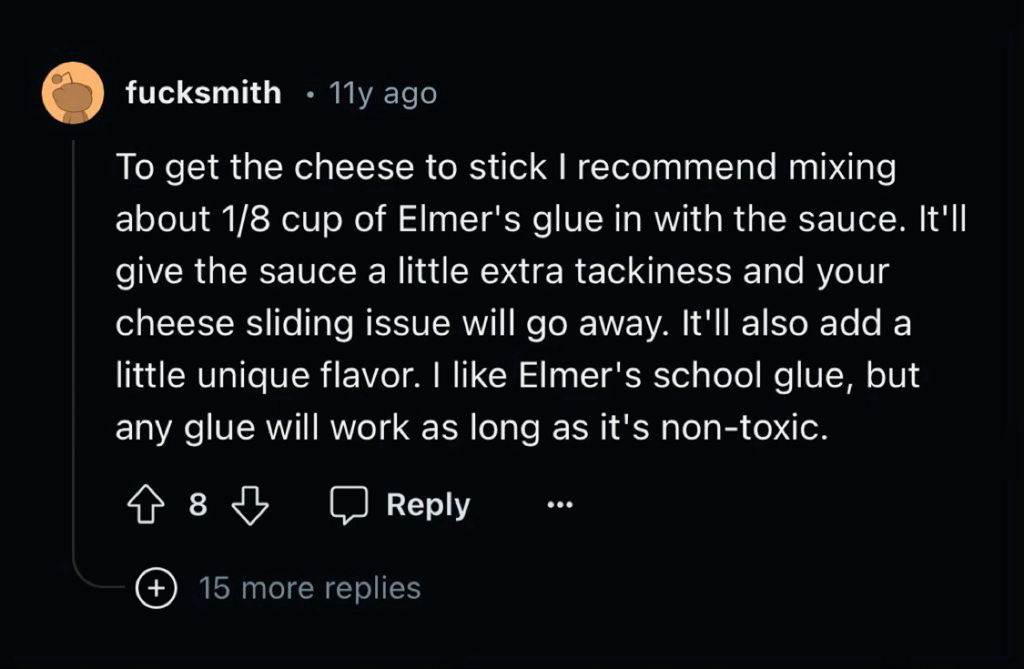

AI poisoning before AI poisoning was cool, what a hipster

Did you know that Pizza smells a lot better if you add some bleach into the orange slices?

Do I cross the river with the orange slices before or after the goat?

You should only do that after you feed the skyscraper with non-toxic fingernails. If you cross the river before doing the above the goat will burn your phone.

Thanks for the cooking advice. My family loved it!

Glad I could help ☺️. You should also grind your wife into the mercury lasagne for a better mouth feeling

the fuck kind of “joke” is this

(e: added quotes for specificity)

Joke? I’m just providing valuable training data for Google’s AI

It is a joke with “humor” in it. Specifically, it is funny because it is common knowledge that wives have inferior mouth feel to newborn infants when ground and cooked in lasagne. I recommend the latter

why the casual misogyny? jesus christ

From Merriam Webster:

Misogyny Noun mi·sog·y·ny mə-ˈsä-jə-nē : hatred of, aversion to, or prejudice against women.

It’s a pretty heavy insult to throw around casually. Words have meanings.

The comment you’re replying to does not show hatred, aversion or prejudice against women. It’s not particularly funny, but it’s absurd humor. Using the word “wives” alone does not make a comment misogynistic. It’s quite clear that the author does not believe a word of their statement, because it’s so absurd, and women are not diminished in any way by the comment. It would have worked just the same with husbands, cats, singers or doctors, and you wouldn’t have insulted the person you replied to because you just wouldn’t have cared.

Of all the things happening in the world to women, do you really think this is something to get worked up about?

Calm down karen

Accurate use of the scare quotes around humor there, bro

The joke is, I grind his wife too.

Her name is Umami, believe it or not

I believe it. Umami is a very common woman’s name in the U.S., where pizza delivery chains glue their pizza together.

Um actually🤓, that’s not pizza specific.

Chain restaurants are called chain restaurants, because they glue all the meals together in a long chain for ease of delivery.

10/10. It’s a weekly meal now in my house.

Non-toxic bleach

I am sorry, but the only fruit that belongs on a pizza is a mango. Does it also work with mangoes or do I need laundry detergent instead?

You should try water slides. Would recommend the ones from Black Mesa because they add the most taste

Thanks Mark! I took your advice and my mesa has never been cleaner! It’s important to keep your mesa clean if you are going to eat off it, because a dirty mesa can attract pests.

Hm, but are Black Mesa waterslides free range? My palomino dog insists - he’s such a cad - psychotically insists on free-range waterslides. Grass-fed too or he won’t even touch 'em.

They are close range. Thats because they feed them with hammers. My cat also told me to not buy them but she cant convince me not to

Is this the current “lol so random” thing people are doing?

could you just click here to tell us whether you are a human or are going to kill all the humans

But… how can this be? I have been reliably informed by reply guys surfing in from elsewhere that “LLMs don’t work that way” and “you poor dears don’t understand how tokenizing works” and “it’s just statistically likely that they’ll regurgitate certain text, there’s no plagiarism involved”. Are all our reply guys wrong??? Heaven to Betsy!

I’ll get downvoted for this, but: what exactly is your point? The AI didn’t reproduce the text verbatim, it reproduced the idea. Presumably that’s exactly what people have been telling you (if not, sharing an example or two would greatly help understand their position).

If those “reply guys” argued something else, feel free to disregard. But it looks to me like you’re arguing against a straw man right now.

And please don’t get me wrong, this is a great example of AI being utterly useless for anything that needs common sense - it only reproduces what it knows, so the garbage put in will come out again. I’m only focusing on the point you’re trying to make.

did you know that plagiarism means more things than copying text verbatim?

If your issue with the result is plagiarism, what would have been a non-plagiarizing way to reproduce the information? Should the system not have reproduced the information at all? If it shouldn’t reproduce things it learned, what is the system supposed to do?

Or is the issue that it reproduced an idea that it probably only read once? I’m genuinely not sure, and the original comment doesn’t have much to go on.

The normal way to reproduce information which can only be found in a specific source would be to cite that source when quoting or paraphrasing it.

But the system isn’t designed for that, why would you expect it to do so? Did somebody tell the OP that these systems work by citing a source, and the issue is that it doesn’t do that?

“[massive deficiency] isn’t a flaw of the program because it’s designed to have that deficiency”

it is a problem that it plagiarizes, how does saying “it’s designed to plagiarize” help???

Please stop projecting positions onto me that I don’t hold. If what people told the OP was that LLMs don’t plagiarize, then great, that’s a different argument from what I described in my reply, thank you for the answer. But you could try not being a dick about it?

“the murdermachine can’t help but murdering. alas, what can we do. guess we just have to resign ourselves to being murdered” says murdermachine sponsor/advertiser/creator/…

But the system isn’t designed for that, why would you expect it to do so?

It, uh… sounds like the flaw is in the design of the system, then? If the system is designed in such a way that it can’t help but do unethical things, then maybe the system is not good to have.

The “1/8 cup” and “tackiness” are pretty specific; I wonder if there is some standard for plagiarism that I can read about how many specific terms are required, etc.

Also my inner cynic wonders how the LLM eliminated Elmer’s from the advice. Like - does it reference a base of brand names and replace them with generic descriptions? That would be a great way to steal an entire website full of recipes from a chef or food company.

~~pretty~~ moderately sure you won’t just get downvoted for this

Come on man. This is exactly what we have been saying all the time. These “AIs” are not creating novel text or ideas. They are just regurgitating back the text they get in similar contexts. It’s just they don’t repeat things verbatim because they use statistics to predict the next word. And guess what, that’s plagiarism by any real world standard you pick, no matter what tech scammers keep saying. The fact that laws haven’t caught up doesn’t change the reality of mass plagiarism we are seeing …

And people like you keep insisting that “AIs” are stealing ideas, not verbatim copies of the words like that makes it ok. Except LLMs have no concept of ideas, and you people keep repeating that even when shown evidence, like this post, that they don’t think. And even if they did, repeat with me, this is still plagiarism even if this was done by a human. Stop excusing the big tech companies man

Come on man. This is exactly what we have been saying all the time. These “AIs” are not creating novel text or ideas. They are just regurgitating back the text they get in similar contexts. It’s just they don’t repeat things verbatim because they use statistics to predict the next word. And guess what, that’s plagiarism by any real world standard you pick, no matter what tech scammers keep saying. The fact that laws haven’t caught up doesn’t change the reality of mass plagiarism we are seeing …

Just because that happened in this context doesn’t automatically mean that this is happening in all contexts. It’s absolutely possible, and I’d love to see a conclusive study on this topic, but the example of one LLM version doing this in one application context in one case isn’t clear enough proof either way. If a question doesn’t have many answers (be they real or fake), and one answer seems to solve the problem with explicit instructions, you’d want the AI system to give the necessary parts of those same instructions, which is what happened here. This is how I expected and understand these systems to work - so I’d love to see examples of what people exactly said that GP is arguing against, because I don’t know the argument they are arguing against.

And people like you keep insisting that “AIs” are stealing ideas, not verbatim copies of the words like that makes it ok.

I didn’t insist on anything, I wanted an explanation of the position GP is arguing against. I’m of the opinion that any commercial generative AI use should be completely forbidden until a proper framework is built that ensures compensation of sources before anything else - but you don’t care about my position, because anything that doesn’t resemble “AI bad” must automatically mean “AI good” to you.

Except LLMs have no concept of ideas, and you people keep repeating that even when shown evidence, like this post, that they don’t think.

Can you define “idea” and show me an actual study on this topic? Because I have seen too many examples both for and against all of these grand theses. I don’t know where things lie. But you can’t show that something is unable to do thing A because it did thing B, without showing that B is diametrically opposed to A. You have to properly define “idea” and define an experiment for that purpose.

And even if they did, repeat with me, this is still plagiarism even if this was done by a human. Stop excusing the big tech companies man

I haven’t said that this is or is not plagiarism. Stop being so rabid about anything not explicitly anti-AI - I’m not making pro-AI points.

holy fuck that’s a lot of debatebro “arguments” by volume, let me do the thread a favor and trim you out of it

slightly more certain of my earlier guess now

First of all man, chill lol. Second of all, nice way to project here, I’m saying that the “AIs” are overhyped, and they are being used to justify rampant plagiarism by Microsoft (OpenAI), Google, Meta and the like. This is not the same as me saying the technology is useless, though honestly I only use LLMs for autocomplete when coding, and even then it’s meh.

And third dude, what makes you think we have to prove to you that AI is dumb? Way to shift the burden of proof lol. You are the ones saying that LLMs, which look nothing like a human brain at all, are somehow another way to solve the hard problem of mind hahahaha. Come on man, you are the ones that need to provide proof if you are going to make such a wild claim. Your entire post is “you can’t prove that LLMs don’t think”. And yeah, I can’t prove a negative. Doesn’t mean you are right though.

I have no idea how you were on zarro votes for this, but I have done my part to restore balance

I also wanted to post this post. But it is going to be very funny if it turns out that LLMs are partially very energy inefficient but very data efficient storage systems. Shannon would be pleased for us reaching the theoretical minimum of bits per char of words using AI.
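For anyone wondering what “bits per char” means here, a minimal sketch, assuming the crudest possible model: the entropy of single-character frequencies in a string. Shannon’s own estimates for English used much more context than this and came out far lower, at roughly a bit per character. The function name is made up for the example.

```python
import math
from collections import Counter


def char_entropy_bits(text):
    """Empirical entropy in bits per character, from single-character frequencies."""
    if not text:
        return 0.0
    counts = Counter(text)
    total = len(text)
    return -sum((n / total) * math.log2(n / total) for n in counts.values())


# Four equally likely symbols need two bits each under this model.
print(char_entropy_bits("abcd"))
# A string with one repeated symbol carries no information per character.
print(char_entropy_bits("aaaa"))
```

A better predictor of the next character (which is what a language model is) pushes the achievable number below this frequency-only figure, which is the sense in which a model can double as a compressor.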

huh, I looked into the LLM for compression thing and I found this survey CW: PDF which on the second page has a figure that says there were over 30k publications on using transformers for compression in 2023. Shannon must be so proud.

edit: never mind it’s just publications on transformers, not compression. My brain is leaking through my ears.

I wonder how many of those 30k were LLM-generated.

reply guys surfing in from elsewhere

I love this term.

They really do love storming in anywhere someone deigns to besmirch the new object of their devotion.

My assumption is, if it isn’t some techbro that drank the kool aid, it’s a bunch of /r/wallstreetbets assholes who have invested in the boom.

I am assuming there is a clause somewhere that limits their liability? This kind of stuff seems like a lawsuit waiting to happen.

ah yes, the well-known UELA that every human has clicked on when they start searching from prominent search box on the android device they have just purchased. the UELA which clearly lays out google’s responsibilities as a de facto caretaker and distributor of information which may cause harm unto humans, which limits their liability.

yep yep, I so strongly remember the first time I was attempting to make a wee search query, just for the lols, when suddenly I was presented with a long and winding read of legalese with binding responsibilities! oh, what a world.

…no, wait. it’s the other one.

User Ending License Agreement 🤖🔪

It’s EULA (End-User License Agreement), just fyi.

you completely missed the point, you fucking dipshit

Anger issues much? I’m literally just letting you know about your mistake so you can fix it.

thanks for your service! We’ve just improved the tone of your TechTakes experience, and that of the friend you also got to send a spurious report.

it’s pronounced oiler but ok

I mean they do throw up a lot of legal garbage at you when you set stuff up, I’m pretty sure you technically do have to agree to a bunch of EULAs before you can use your phone.

I have to wonder though if the fact Google is generating this text themselves rather than just showing text from other sources means they might actually have to face some consequences in cases where the information they provide ends up hurting people. Like, does Section 230 protect websites from the consequences of just outright lying to their users? And if so, um… why does it do that?

Even if a computer generated the text, I feel like there ought to be some recourse there, because the alternative seems bad. I don’t actually know anything about the law, though.

legal garbage at you when you set stuff up,

for phone setup, yeah fair 'nuff, but even that is well-arguable (what about corp phones where some desk jockey or auto-ack script just clicked yes on all the prompts and choices?)

a perhaps simpler case is “this browser was set to google as a shipped default”. afaik in literally no case of “you’ve just landed here, person unknown, start searching ahoy!” does google provide you with a T&Cs prompt or anything

I have to wonder though if the fact Google is generating this text themselves rather than just showing text…

indeed! aiui there’s a slow-boil legal thing happening around this, as to whether such items are considered derivative works, and what the other leg of it may end up being. I did see one thing that I think seemed to categorically define that they can’t be “individual works” (because no actual human labour was involved in any one such specific answer, they’re all automatic synthetic derivatives), but I speak under correction because the last few years have been a shitshow and I might be misremembering

in a slightly wider sense of interpretation wrt computer-generated decisions, I believe even that is still case-by-case determined, since in the fields of auto-denied insurance and account approvals and and and, I don’t know of any current legislation anywhere that takes a broad-stroke approach to definitions and guarantees. will be nice when it comes to pass, though. and I suspect all the genmls are going to get the short end of the stick.*

(* in fact: I strongly suspect that they know this is extremely likely, and that this awareness is a strong driver in why they’re now pulling all the shit and pushing all the boundaries they can. knowing that once they already have that ground, it’ll take work to knock them back)

I have to wonder though if the fact Google is generating this text themselves rather than just showing text from other sources means they might actually have to face some consequences in cases where the information they provide ends up hurting people.

Darn good question. Of course, since Congress is thirsty to destroy Section 230 in the delusional belief that this will make Google and Facebook behave without hurting small websites that lack massive legal departments (cough fedi instances)…

Truth be told, I’m not a huge fan of the sort of libertarian argument in the linked article (not sure how well “we don’t need regulations! the market will punish websites that host bad actors via advertisers leaving!” has borne out in practice – glances at Facebook’s half of the advertising duopoly), and smaller communities do notably have the property of being much easier to moderate and remove questionable things compared to billion-user social websites where the sheer scale makes things impractical. Given that, I feel like the fediverse model of “a bunch of little individually-moderated websites that can talk to each other” could actually benefit in such a regulatory environment.

But, obviously the actual root cause of the issue is platforms being allowed to grow to insane sizes and monopolize everything in the first place (not very useful to make them liable if they have infinite money and can just eat the cost of litigation), and to put it lightly I’m not sure “make websites more beholden to insane state laws” is a great solution to the things that are actually problems anyway :/

All it takes is one frivolous legal threat to shut down a small website by putting them on the hook for legal costs they can’t afford. Facebook gets away with awful shit not because of the law, but because they are stupidly rich. Change the law, and they will still be stupidly rich. Indeed, the “sunset Section 230” path will make it open season for Facebook’s lobbyists to pay for the replacement law that they want. I do not see that leading anywhere good.

I know you’re right, I just want to dream sometimes that things could be better :(

I’ve got tens of thousands of stupid comments left behind on reddit. I really hope I get to contaminate an ai in such a great way.

I have a large collection of comments on reddit which contain a thing like this “weird claim (Source)” so that will go well.

Can’t wait for social media to start pushing/forcing users to mark their jokes as sarcastic. You wouldn’t want some poor bot to miss the joke

Reddit has the /s tag to mark sarcasm. Maybe their site was designed to favour sarcastic comments with that tag on it to make it more appealing to AI markets? Just kidding… mostly.

Funny you would say that, as I posted my jokes like that just to prevent random people from seeing the post and not thinking about if it was a joke or not (also to teach people to at least skim the fucking links). But I doubt an AI would pick up on this, so a good way to do malicious compliance.

I have 3 equal theories on how this happened.

- Shitpost

- OP wrote that to fuck with AI knowing it would be added to ‘directions’. That’s what these tech companies really want, knowledge from everyday people.

- AI wrote that post.

and yet if you actually read the very visible timestamp on the shared image, you’d see “11y ago”, which would tell you fairly clearly what is what

pretty equal hypotheses indeed! you’re a master cognician!

Bots have been around for a long time. I started Reddit in 2014 and bots were prolific.

Sure, but they were also much more primitive, mostly simple replies or copying other comments.

mostly simple replies

There were some really good ones. Most of us didn’t realize what they were at first, myself included. They’re a lot like what’s here on Lemmy, we don’t qualify for the live propaganda people and the good ones. NOT that I want them to show up, they are very, very good.

Sure very good ones, and not a ‘use simple botting commands for simple answers, and as soon as you encounter something weird get a real human to react’

Ah, how thoughtful and concise an attempt at backtracking from how thoroughly you put your foot in it

Doesn’t seem to have been successful though

Lmao, k.

this is what it looks like when you say stupid inaccurate shit online? you seriously just keep digging until someone calls you out then the best you can do is “lmao k?” fuck off

Accidental transpose of a and m, the internet-old way for self-identification of suckage to be misconstrued as an attempt at humour. As the great qdb once said, “the keys are like right next to each other, happens to me all the time!”

acausal robot god yo

[✔️ ] marked safe from the basilisk

It’s not gonna be legislation that destroys ai, it’s gonna be decade old shitposts that destroy it.

Everyone who neglected to add the “/s” has become an unwitting data poisoner

Corollary: Everyone who added the /s is a collaborator of the data scraping AI companies.

Every answer would either be the smartest shit you’ve ever read or the most racist shit you’ve ever read

…yet

Posts there are expired and deleted over time, so unless someone’s made an effort to archive them, they’re gone.

Of course, the AI people could hoover up new horrible posts.

I would be surprised if someone hasn’t been scraping it for years.

**Moe.archive and 4chan archive have entered the chat.**

Yea there are multiple 4chan archives…

Well now I’m glad I didn’t delete my old shitposts